Physical Intelligence and What LLMs Still Can’t Do

"World AI" and where we are today.

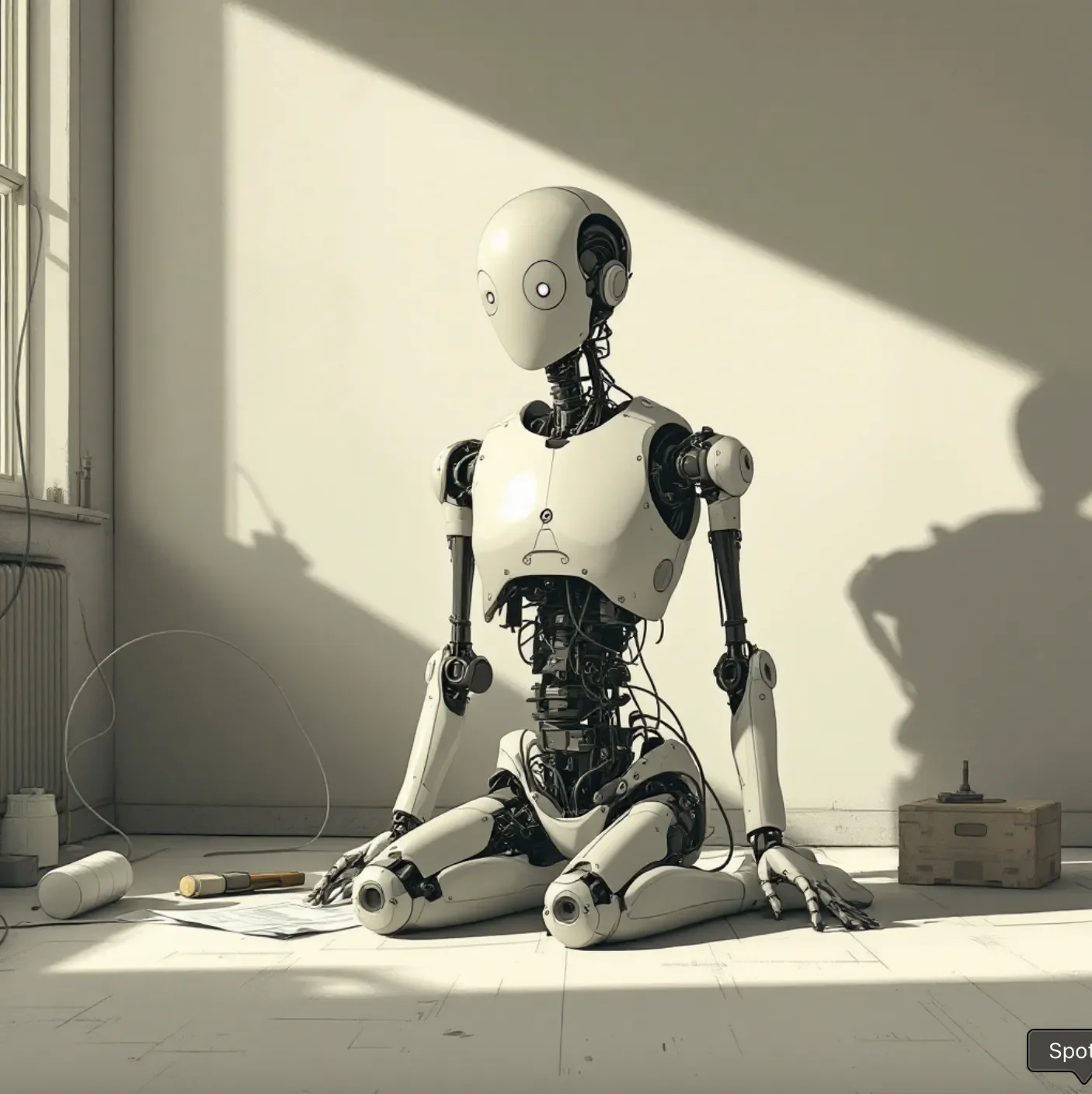

I’ve spent the past few years watching AI evolve at extraordinary speed, yet the conversation this week pulled me back to a simple question: what does intelligence look like outside a screen? LLMs can write, plan, analyse, and act across digital systems with reliability. They operate in environments designed for clear inputs and predictable outcomes. That is where today’s breakthroughs shine.

Everything changes once we move into the physical world. Today’s sensors still misread light or texture, for example. In these environments, the capability gap becomes obvious. Yes, there is progress, but nothing close to the leap we’ve seen in language.

Two Paths That Do Not Grow at the Same Speed

We often talk about language models, agents, robotics, and autonomy as if they sit on one timeline. They don’t. They are developing along two very different paths.

LLMs scale because language is abundant and structured. The internet gave them a lifetime of examples. Software also follows predictable rules. A workflow triggered in London behaves the same way in Manila.

The physical world does not offer this foundation because environments change and objects vary. A robot has to sense and respond to all of this in real time, and even tiny variations can cause errors. This is why so many robotics demonstrations happen in controlled rooms.

A Practical Way to Think About Physical AI

To keep expectations realistic, here is a simple lens that helps cut through the noise.

1. Where does it work? - If it depends on a controlled environment, it is narrow.

2. How much autonomy is there? - Frequent human intervention means it has not generalised.

3. How stable is it under variation? - Lighting, angles, or texture changes should not break behaviour.

4. How does it learn? - Physical learning is slow and expensive. Simulation alone is not enough.

5. What does the hardware allow? - Sensors, actuators, and materials set hard limits.

6. Do scaling claims make sense? - LLM-style scaling does not apply. Robots cannot gather experience at internet scale.

This framework helps builders and teams stay grounded in what is actually possible today.
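For teams that like to keep this kind of lens next to their project notes, the six questions can be written down as a small checklist. The sketch below is only one way to do that: the class name, field names, and the example answers are all made up for illustration, and the yes/no framing is a simplification of questions that usually have more nuanced answers.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass
class PhysicalAIClaim:
    """Yes/no answers to the six questions for one system.

    All names here are illustrative assumptions, not an established rubric.
    """

    works_outside_controlled_env: bool        # 1. Where does it work?
    runs_without_frequent_intervention: bool  # 2. How much autonomy is there?
    stable_under_variation: bool              # 3. Lighting, angles, texture
    learns_beyond_simulation: bool            # 4. How does it learn?
    hardware_supports_task: bool              # 5. What does the hardware allow?
    scaling_claim_is_grounded: bool           # 6. Do scaling claims make sense?


def open_questions(claim: PhysicalAIClaim) -> list[str]:
    """Return the names of the checks the claim does not yet pass."""
    return [f.name for f in fields(claim) if not getattr(claim, f.name)]


# Hypothetical example: a demo that only works in a staged room,
# with an operator on standby and an internet-scale data story.
demo = PhysicalAIClaim(
    works_outside_controlled_env=False,
    runs_without_frequent_intervention=False,
    stable_under_variation=True,
    learns_beyond_simulation=True,
    hardware_supports_task=True,
    scaling_claim_is_grounded=False,
)

for name in open_questions(demo):
    print("Still open:", name)
```

The point is not the score. It is that every "no" becomes a named question to ask before accepting a claim about physical AI.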

What Are We Really Trying to Build?

Before discussing timelines or breakthroughs, it helps to be clear about the goal. Do we want human-level robots? Specialised machines for narrow tasks? Safe assistants in controlled settings? Or something else entirely?

The answer shapes the expectation. Without clarity, it is easy to project the speed of software onto a field that works under different constraints.

The Bottom Line

Software intelligence is accelerating quickly. Physical intelligence remains an open research challenge. This is a reminder that different forms of intelligence evolve under different rules.

Right now, the real opportunity sits in the digital world. This is where AI acts reliably. This is where builders can create value today. And this is where levels of agency already exist.

All the zest,

Cien

Resources

https://pressroom.toyota.com/ai-powered-robot-by-boston-dynamics-and-toyota-research-institute-takes-a-key-step-towards-general-purpose-humanoids/